Disaster Recovery

Webinar: The Importance of a Business Impact Analysis

The following is a lightly edited transcript of our September 2022 webinar on the importance of Business Impact Analysis. The speakers are: Kelly Culwell, Senior Manager, Service Transition, Dataprise; Steven New, Director of Operations, Dataprise; Tom Shay, vCIO,...

Why RPO and RTO are so important to effective disaster recovery

Business continuity is a top priority for most IT departments. Between human error and hardware failure, no environment is completely free of risk. And with 236.1 million ransomware attacks worldwide during the first half of 2022, odds are that your organization will...

Webinar: How DRaaS Works in a Crisis

This cybersecurity webinar looks at why Microsoft 365 backup is critical and 7 ways backing it up will save you time, money, and stress.

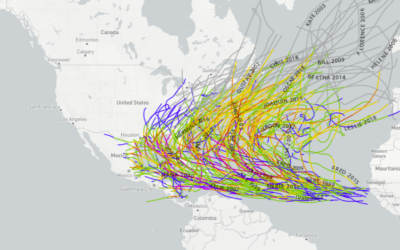

Is your company in hurricane territory? Do these things to protect your data

For much of the country, businesses are at risk from the damaging effects of a hurricane for six months of the year. The Atlantic hurricane season for 2022 runs from June 1 through November 30, and while it’s been a relatively quiet season so far, earlier...

Should I Outsource Disaster Recovery?

Does it make good business sense to outsource disaster recovery? Yes, and there are compelling reasons to do so. Read more…

Veeam Backup & Replication v12 Features Take Modern Data Protection to the Next Level

Veeam® is a recognized industry leader in modern data protection, with a solutions suite continuously updated to protect businesses from accidental data loss and emerging cyber threats. With its upcoming release of Backup & Replication™ v12, Veeam is again...

Webinar: Data Protection Trends 2022

Jason Buffington, VP of Solution Strategy for Veeam® Software, and Kelly Culwell of Global Data Vault discuss the Data Protection Trends 2022 report.

What Are Data Movers, Transport Services, and Bottleneck Detectors?

Veeam Backup & Replication (VBR) detects performance bottlenecks during job processing, which are not always evident. Understanding these bottlenecks allows you to troubleshoot latency quickly.

Dataprise Expands its DRaaS and Data Protection Offerings with Acquisition of Industry Leader Global Data Vault

Dataprise, a leading strategic IT managed service provider, today announced the acquisition of Global Data Vault, a leader in DRaaS, BaaS and modern data protection solutions.