by Brian Brignac | Jan 22, 2013 | Archived, Disaster Recovery

Cloud Storage Services

There’s a lot of buzz around cloud services. Everything from accounting to telecommunications to everyday computing with virtual desktops is moving to the cloud. It’s not the wave of the future; it’s what’s happening now.

Companies are embracing the cloud in droves, seeing its advantages over traditional (read: “antiquated”) ways of competing on the big stage. Our little slice of the Internet is intimately involved with cloud disaster recovery, and while it’s the norm at Global Data Vault, we realize that many companies are still warming to the idea that there’s something more secure and nimble than traditional disaster recovery solutions.

If you’re evaluating whether a leap to the cloud is right for you, we’ll look below at the key characteristics and benefits of Cloud Disaster Recovery vs. traditional Disaster Recovery Solutions.

by Brian Brignac | Nov 16, 2012 | Archived, Disaster Recovery, Disaster Studies

“Not only were many firms unsure about whether their galoshes were waterproof,” Bart Chilton, the regulator at the Commodity Futures Trading Commission said, “they hadn’t even tried them on.”

Chilton’s sharp-tongued critique was a response to the apparent lack of disaster preparation in the days before Super Storm Sandy.

Chilton is still angry that Hurricane Sandy was able to bring the New York Stock Exchange to its knees. The NYSE stayed dark, unable to shift to an emergency backup platform, because several trading firms had never tested their recovery strategy or their connectivity with the data backup system.

“Now that we have this stark and frankly frightening example to work from, we’d be negligent not to move quickly to make sure that our emergency systems are comprehensive and fully tested,” says Chilton. He added that he intends to urge financial firms and federal authorities to coordinate action plans in the face of future calamities. Specifically, he is calling for the creation of a private-public task force that would convene in advance of crises like hurricanes. Chilton said the group would mandate technology testing protocols, spell out guidelines for operating in a crisis and potentially sketch a timetable for recovery efforts.

Chilton’s agency, the Commodity Futures Trading Commission, is tasked with ensuring the open and efficient operation of the futures markets, so you can understand why this was such a black eye for the CFTC.

This comes on the heels of the CFTC’s other recent foray into the spotlight: its investigation into MF Global’s alleged improper transfer of roughly $1.2 billion in customer money to pay its own bills. The CFTC is actively examining MF Global’s then chairman and CEO, Jon S. Corzine, for his role in accelerating the firm’s $40 billion demise. (Corzine is a former New Jersey governor and U.S. senator from New Jersey.)

While the precipitating reason for Chilton’s galoshes rant is disturbing, it’s also a cornerstone moment for our company, whose foundation of data protection is frequent testing. Global Data Vault’s point of differentiation from many of our peers is that we conduct quarterly testing of our customers’ systems, precisely so a Super Storm Sandy can’t take them out of the running for several days. The silver lining of the whole Northeast mess is that galoshes are now part of the data protection conversation.

If you have questions about the readiness of your galoshes, contact us today.

by Brian Brignac | Nov 12, 2012 | Disaster Recovery, Disaster Studies

Hurricane Sandy provided a fascinating opportunity to study both the level of disaster planning and the resilience of New York City data centers. This article will examine a) what actually happened, b) what the risk was, and c) what lessons were learned.

What Actually Happened?

Simply put, data centers in New York were caught off guard. Consider these incidents.

Internap and Peer 1, located at 75 Broad Street, suffered basement-level flooding which knocked out diesel fuel pumps.

Datagram, located at 33 Whitehall, experienced the exact same problem: 5 feet of water in the basement. As a result, several high-profile blogs and numerous websites went dark.

Both of these facilities are located in a Zone A flood zone. Zone A is FEMA’s second-highest risk category.

Then there were fuel supply issues. Fog Creek Software, which makes and hosts Trello, Copilot and other popular platforms out of Peer 1, had to assemble a bucket brigade to carry diesel fuel up 17 stories to refuel a generator at Peer 1. As a precaution, Trello was moved to Amazon Web Services and seems to have suffered only limited downtime, but the bucket brigade was still required.

ShoreTel, the VoIP provider, had three data centers, all in Lower Manhattan, including one at 75 Broad St. That facility successfully switched over to generator power, but because of “city restrictions” the generators had to be shut down. 700 customers went down.

Fortunately, things did not get worse for Fog Creek, but carrying 5-gallon buckets of diesel fuel up 17 stories in a building with power problems strikes us as a recipe for something truly horrible.

Teams from Squarespace fill buckets with diesel fuel to haul them up 17 stories to the generator keeping the data center online. Staff from Peer 1, Squarespace and Fog Creek Software have formed this unusual Internet bucket brigade. (Photo via Squarespace)

A typical rack of servers requires 5 to 10 kW of power, including cooling/HVAC. Typical data centers range in size from 5,000 to 40,000 square feet. A mid-sized facility at 20,000 sq ft would house about 600 racks. That equates to roughly 5 megawatts (MW) of power. A reasonably efficient diesel generator would require roughly 200 gallons of diesel per hour to push out 5 MW, a bit over 3 gallons per minute.

Data centers typically tell us they have 1 week of diesel on site and a resupply contract. A full week for a 20,000 sq ft data center is roughly 34,000 gallons. We suspect that in Lower Manhattan the standard was more like 1 day. Then resupply problems hit because of street flooding and road and bridge closures.
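The sizing arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch that uses only the rule-of-thumb figures from this post (600 racks, 5 to 10 kW per rack, 200 gallons per hour at 5 MW). The function names are illustrative, and the 40 gallons per megawatt-hour ratio is simply derived from those figures, not taken from any generator specification.

```python
# Back-of-the-envelope standby fuel estimate for a mid-sized data center.
# All inputs are the rule-of-thumb figures quoted in this post, not vendor specs.

RACKS = 600                    # ~20,000 sq ft facility
KW_PER_RACK_RANGE = (5, 10)    # per-rack draw, including cooling/HVAC
# Assumption derived from the post: 200 gal/hr to produce 5 MW -> 40 gal per MWh.
GAL_PER_MWH = 200 / 5


def load_mw(racks: int, kw_per_rack: float) -> float:
    """Total facility load in megawatts."""
    return racks * kw_per_rack / 1000


def fuel_gallons(load: float, hours: float) -> float:
    """Diesel burned while carrying `load` MW for `hours` hours."""
    return load * GAL_PER_MWH * hours


low, high = (load_mw(RACKS, kw) for kw in KW_PER_RACK_RANGE)
print(f"Load range: {low:.1f} to {high:.1f} MW")

design_load = 5.0  # MW, the post's rounded figure
per_hour = fuel_gallons(design_load, 1)
print(f"Burn rate: {per_hour:.0f} gal/hr ({per_hour / 60:.2f} gal/min)")
print(f"One day on site:  {fuel_gallons(design_load, 24):,.0f} gal")
print(f"One week on site: {fuel_gallons(design_load, 24 * 7):,.0f} gal")
```

At the 5 MW example load, a week works out to 33,600 gallons, consistent with the roughly 34,000 gallons quoted above, while a single day is only 4,800 gallons. At 200 gallons per hour, that same load would empty about 40 five-gallon buckets every hour.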

What was the Risk?

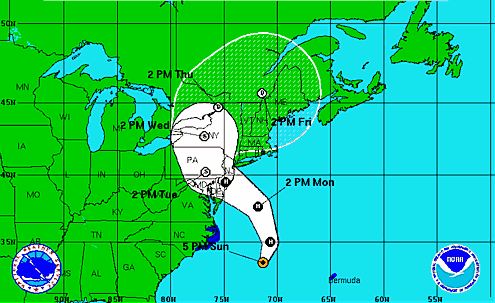

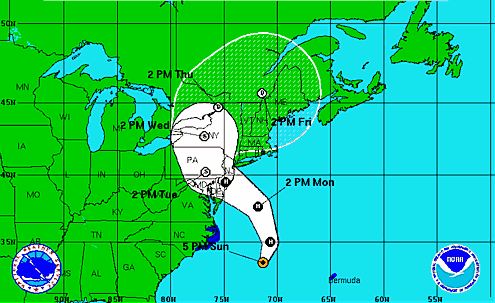

The Mid-Atlantic States do not see nearly as many hurricanes as the Southeast and the Gulf Coast of the United States. The average return period for hurricanes within 50 miles of New York City is 18 to 19 years.
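A return period is easier to reason about as a probability. If we treat each year as independent, with an annual chance of 1/T for a return period of T years (a common simplification, and our assumption here), the chance of at least one hurricane within 50 miles of NYC over an N-year horizon is 1 − (1 − 1/T)^N. A minimal Python sketch bracketing the 18 to 19 year figure above:

```python
def chance_of_at_least_one(return_period_years: float, horizon_years: int) -> float:
    """Probability of one or more events in `horizon_years`, assuming independent
    years, each with an annual probability of 1 / return_period_years."""
    annual = 1.0 / return_period_years
    return 1.0 - (1.0 - annual) ** horizon_years


for years in (1, 10, 20):
    # Bracket the post's 18-19 year return period.
    low = chance_of_at_least_one(19, years)
    high = chance_of_at_least_one(18, years)
    print(f"{years:>2}-year horizon: {low:.0%} to {high:.0%}")
```

Under these assumptions, a return period of 18 to 19 years means roughly a 5 to 6 percent chance in any single year, but a bit over 40 percent over a 10-year horizon and roughly two in three over 20 years, which is well within the service life of many facilities.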

For most of hurricane season, the typical hurricane tracks observed by NOAA take these storms out to sea at the more northern latitudes of the NYC area.

Here are the July, August and September typical tracks:

But look at how this changes in October:

And notice how closely Hurricane Sandy lined up with the typical October track.

Finally, what about the frequency of storm origin in October? Compare the frequency map for storms originating August 21–31, the peak of hurricane season, with the map for October 11–20:

You can see that activity is lower in October, but it is hardly as dormant as it becomes a few weeks later:

Just as August and September are the periods of greatest risk in the Southeast and the Gulf Coast, October clearly presents the greatest risk of hurricanes in NYC.

What is the solution?

If these providers had built to the following standards, downtime would have been minimized:

- One week of fuel for standby power onsite

- A fuel resupply plan in place, or

- A redundant or backup site more than several hundred miles away

For any disaster recovery, hosting or colocation solution, we would look to the Uptime Institute, which publishes the Data Center Site Infrastructure Tier Standard for Operational Sustainability.

Based on that standard, we’d offer the following comparison. Red indicates where Lower Manhattan has the higher risk profile.

| Disaster Risk Component | Higher Risk | Lower Risk |
| --- | --- | --- |
| Flooding and Tsunami | < 100 Year Flood Plain | > 100 Year Flood Plain |
| Hurricanes and Tornadoes | High | Medium |
| Seismic Activity | Zone 3 or 4 | Zone 2A or 2B |
| Airport/Military Airfield | < 3 miles from active runway | > 3 miles from active runway |
| Adjacent Properties | Chemical plant, etc. | Office buildings, land |
| Transportation Corridors | < 1 mile | > 1 mile |
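To apply the table to a candidate site, one option is a simple checklist that lists which components fall on the higher-risk side. The Python sketch below is a hypothetical illustration: the `Site` fields and the flag-listing approach are our own assumptions, not part of the Uptime Institute standard. The thresholds come straight from the table, and we read “< 100 Year Flood Plain” as a site inside the 100-year flood plain.

```python
from dataclasses import dataclass


@dataclass
class Site:
    # Hypothetical site profile; fields mirror the rows of the risk table.
    inside_100_year_flood_plain: bool
    hurricane_tornado_risk: str          # "high" or "medium"
    seismic_zone: str                    # e.g. "2A", "2B", "3", "4"
    miles_to_active_runway: float
    hazardous_neighbor: bool             # chemical plant, etc.
    miles_to_transport_corridor: float


def higher_risk_components(site: Site) -> list[str]:
    """Return the table rows where the site falls in the 'Higher Risk' column."""
    flags = []
    if site.inside_100_year_flood_plain:
        flags.append("Flooding and Tsunami")
    if site.hurricane_tornado_risk == "high":
        flags.append("Hurricanes and Tornadoes")
    if site.seismic_zone in ("3", "4"):
        flags.append("Seismic Activity")
    if site.miles_to_active_runway < 3:
        flags.append("Airport/Military Airfield")
    if site.hazardous_neighbor:
        flags.append("Adjacent Properties")
    if site.miles_to_transport_corridor < 1:
        flags.append("Transportation Corridors")
    return flags


# Illustrative values only; not a survey of any real building.
example = Site(
    inside_100_year_flood_plain=True,
    hurricane_tornado_risk="high",
    seismic_zone="2A",
    miles_to_active_runway=8.0,
    hazardous_neighbor=False,
    miles_to_transport_corridor=0.2,
)
print(higher_risk_components(example))
```

Listing the flagged rows, rather than reducing them to a single score, keeps the result easy to compare against the table itself.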

To review your site’s risk of various natural disasters, see our Natural Disaster Risk Maps.

by Brian Brignac | Nov 9, 2012 | Archived, Cybersecurity, Disaster Recovery

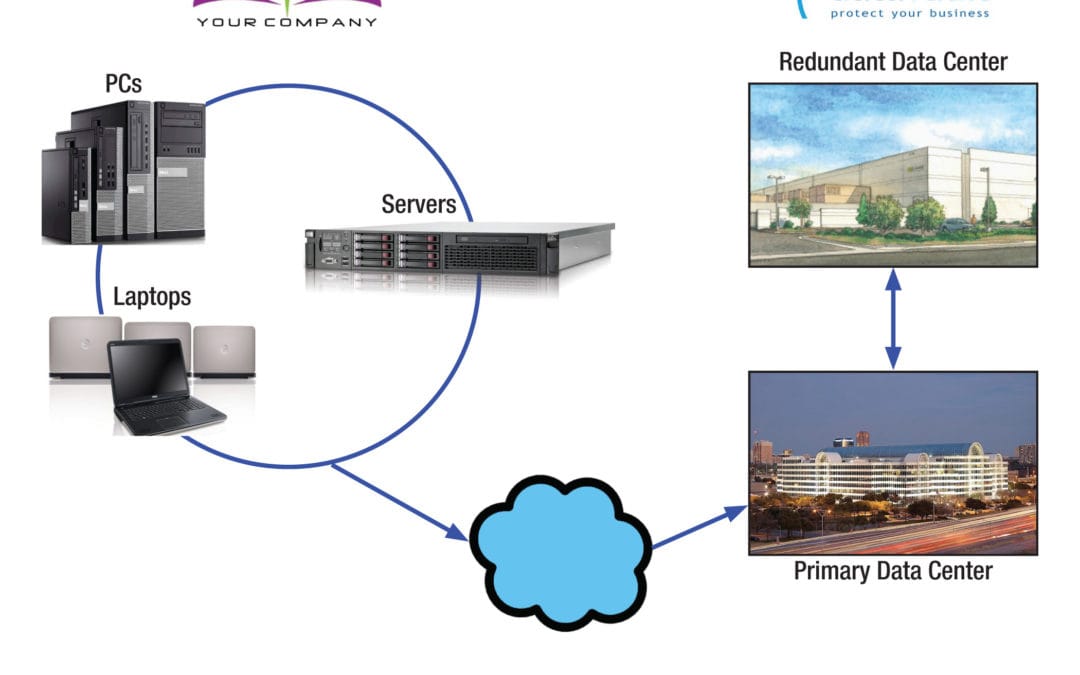

Your data protection audit lays out the plan that enables you to sleep at night knowing that data loss couldn’t destroy your business or be a costly and burdensome event.

In the previous installment of our three-part data protection audit series, we looked at the questions you need to ask about your data requirements to determine what belongs in your data protection audit. Those questions focused on the functional areas of the business.

In this last installment, we will view the business from the perspective of each business system.

There are three areas of concern:

- Support systems

- Devices

- IT operations

Support systems audit

Backing up a server nightly, or even less frequently, is a great start. But if some of the systems on that backup need to be restored to a point within the last 24 hours, you’re likely to lose data in the gap between backups during a data loss event. For those systems, you’ll need a faster backup and restore approach.

But don’t stop with servers; the same methodology applies to PCs. For example, say a company has one PC dedicated to processing credit card transactions. What would happen if that one computer went down? It’s imperative that you have that PC well protected. If it had an unexpected hardware failure, not only would transactions be interrupted, you’d also lose transaction data, unless you were able to restore from a data center and run a virtual PC.
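The gap described above comes from the time between backups: with a nightly job, a failure just before the next run can lose up to a day of changes. Below is a minimal Python sketch that compares each system’s worst-case data loss with how much data the business can afford to lose (commonly called the recovery point objective, or RPO). The class, the system names, and the hour values are hypothetical examples.

```python
from dataclasses import dataclass


@dataclass
class ProtectedSystem:
    name: str
    backup_interval_hours: float     # time between successful backups
    max_tolerable_loss_hours: float  # how much recent data the business can lose


def worst_case_loss_hours(system: ProtectedSystem) -> float:
    # A failure just before the next backup loses everything since the last one.
    # (Simplification: ignores how long the backup job itself takes.)
    return system.backup_interval_hours


def systems_at_risk(systems: list[ProtectedSystem]) -> list[str]:
    """Names of systems whose backup schedule can lose more data than tolerated."""
    return [s.name for s in systems
            if worst_case_loss_hours(s) > s.max_tolerable_loss_hours]


# Hypothetical inventory: a nightly file server and the card-processing PC.
inventory = [
    ProtectedSystem("file server", backup_interval_hours=24, max_tolerable_loss_hours=24),
    ProtectedSystem("card-processing PC", backup_interval_hours=24, max_tolerable_loss_hours=1),
]
print(systems_at_risk(inventory))
```

In this example the nightly schedule is acceptable for the file server but not for the card-processing PC, which is exactly the kind of system that needs more frequent protection.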

Device audit

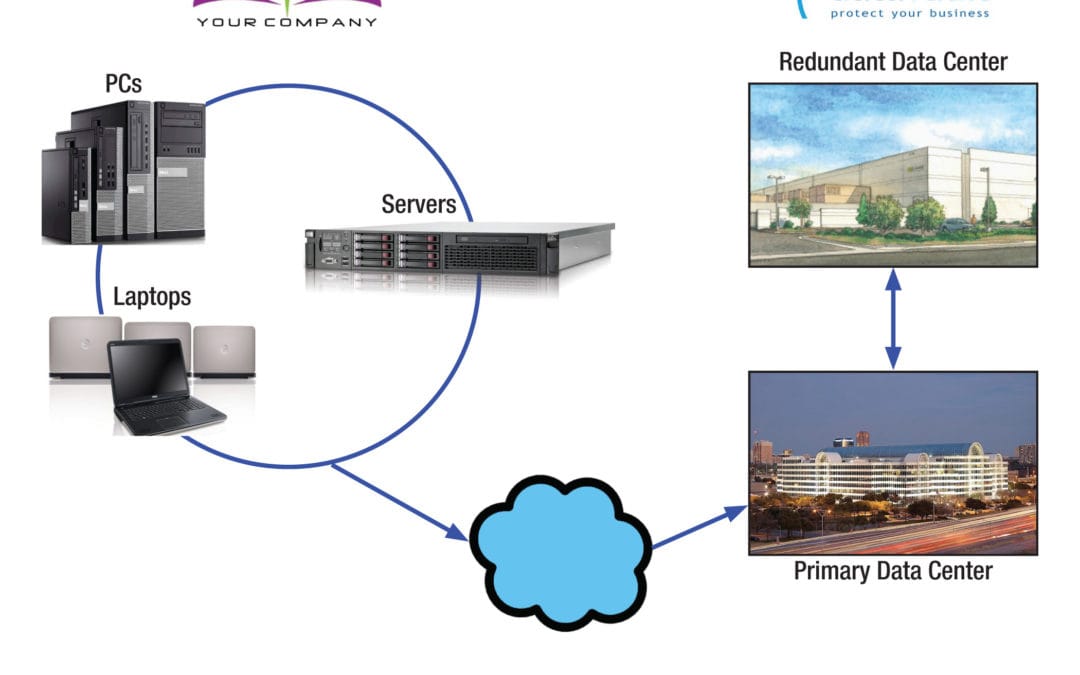

This is the easier part of your data protection audit, but it’s still necessary. Assess every device your company uses that holds data: servers, PCs, storage devices, laptops, tablets, cell phones used in your business, and so on. Instead of looking at data recovery strictly from a business function perspective, look at it from the device perspective. How would you recover the device if it were gone? What do you have now, and what should you have?

Consider this: backing up PCs is an important process, and the same goes for laptops. You’ll need to evaluate whether the data on those devices is critical enough to your business to justify the resources needed to protect it. If you’re a software development company writing applications at your client sites, billing at $200 an hour… well, one guy losing a laptop could cost many days of billable hours! No, it probably won’t ruin a company, but smaller companies would certainly reel from a $5,000 loss due to one lost laptop. And what about the resources lost if you couldn’t recreate the data on that laptop?
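The laptop example is simple arithmetic: lost revenue is billable hours lost multiplied by the billing rate. A short Python sketch using the post’s $200 hourly rate and $5,000 figure; the 8-hour billable workday is our assumption.

```python
HOURLY_RATE = 200    # dollars per billable hour, from the example above
WORKDAY_HOURS = 8    # assumption: one consultant's billable day


def billable_loss(hours_lost: float, rate: float = HOURLY_RATE) -> float:
    """Revenue lost while work on the missing laptop is recreated."""
    return hours_lost * rate


def hours_for_loss(dollars: float, rate: float = HOURLY_RATE) -> float:
    """How many billable hours a given dollar loss represents."""
    return dollars / rate


hours = hours_for_loss(5_000)
print(f"$5,000 = {hours:.0f} billable hours, about {hours / WORKDAY_HOURS:.1f} workdays")
```

At that rate, $5,000 corresponds to 25 billable hours, or a little over three working days for one person, before counting any data that can’t be recreated at all.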

IT operations audit

The final part of your audit process will be IT operations where you’ll identify the level of protection needed and perform testing.

No matter the type of technology that you’re evaluating, you need to analyze what your plan is to recover the device today, and what the plan should be in the future. In the end, you will have your complete needs analysis for all your technology company-wide:

- what needs to be protected,

- how to do it, and

- how to restore it.

There’s an unintended benefit from this whole endeavor. What started as a data protection audit has actually given your company a roadmap for the health and resilience of your business. Your data protection audit lays out the plan that enables you to sleep at night knowing that data loss couldn’t destroy your business or be a costly and burdensome event.

How to Plan and Execute a Data Protection Audit Series:

Part One: How to Plan and Execute a Data Protection Audit

Part Two: Data Protection Audit Planning

Part Three: Data Protection Audit – Systems, Devices and IT Operations

by Brian Brignac | Nov 7, 2012 | Archived, Cybersecurity, Disaster Recovery

In part one of “How to plan and execute a data protection audit,” we discussed the importance of user participation in the design process of your data audit plan. In this installment, we go into more detail about the questions you need to ask about your business processes to determine what you need to secure.

When initiating a data protection audit, the starting point is mapping out the systems that make your company work.

Michael Hammer, the highly respected author of “Faster, Cheaper, Better” and “Reengineering the Corporation: A Manifesto for Business Revolution,” identified the following key areas of IT systems that likely affect your data and how it travels within your company:

▪ Shared databases, making information available at many places

▪ Expert systems, allowing generalists to perform specialist tasks

▪ Telecommunication networks, allowing organizations to be centralized and decentralized at the same time

▪ Decision-support tools, allowing decision-making to be a part of everybody’s job

▪ Wireless data communication and portable computers, allowing field personnel to work independently of the office

▪ Interactive videodisk, to get in immediate contact with potential buyers

▪ Automatic identification and tracking, allowing things to tell where they are, instead of having to be found

▪ High performance computing, allowing on-the-fly planning and revisioning

Michael Hammer’s definitions allowed him to develop the “Business Process Reengineering Cycle,” which now provides an excellent framework for developing a thorough data protection audit plan.

We’ve detailed the four main steps of every audit below:

Data Protection Audit Step One:

Identify the processes – which business systems would you need to recover after a complete loss?

A couple of examples:

A service business doesn’t sell widgets; it charges for its time. A key business process will be how it captures time spent per client and the billing system that works from that data. The audit should define how the company generates invoices. Some invoices may be built with more automated systems, like an app on employee smartphones or a cloud-based storage system, but some inputs may still be traditional time slips, with people entering the information into the system for payroll. The key is to look at all the applications that get touched, identify what makes each one run, and ensure they’re all part of the data protection plan.

On the other hand, an oil refiner has a process that tracks raw materials coming in and finished goods going out through pipelines/freightliners. This type of company is required by law to trace material points of origin from producers to its logistics system. The oil refinery would need to include the systems and the data capture devices in the field that sit behind these tracking mechanisms.

If you’re a retailer, you’ll need to assess all your processes, from inventory systems to time and attendance, scheduling and couponing.

In all industries, the audit team must ask: what processes does the business rely on to function, and where does the data live?

Data Protection Audit Step Two: Analyze these on an “as-is” basis.

- How would you recover today from a complete loss?

- How long would it take?

- In what condition and how current would the recovered data and systems be?

These questions must be posed:

- How would you recover your data and systems now if everything was gone?

- What would your first step be to build those data and systems back?

- In what condition would the current recovered data be?

- How well would you recover in the event of a significant data loss?

Remember to consider outsourced information. If your company uses smartphone apps, ask who holds the data and where the interfaces are. You might even have to contact vendors to confirm where your data resides and what protections they have for your information.

As you identify the business processes bit by bit, map out the connections and interfaces that connect with the internal systems.

Data Protection Audit Step Three:

Design the new process. What SHOULD this look like?

At the end of this whole discovery, you will have a list of all your business processes, what recovery would look like for each, and the requirements for recovery. Stand back and compare what recovery would look like today with what it should look like. There will be obvious gaps and opportunities for improvement. Those opportunities are where you need to focus your efforts to ensure a sensible recovery.
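One way to make the “as-is” versus “should be” comparison concrete is to record both for each business process and list where they differ. This is a hypothetical Python sketch: the process names, the two measures (hours to recover and hours of data lost), and the target values are illustrative assumptions, not figures from this series.

```python
from dataclasses import dataclass


@dataclass
class RecoveryProfile:
    hours_to_recover: float     # how long until the process runs again
    hours_of_data_lost: float   # how current the recovered data is


@dataclass
class BusinessProcess:
    name: str
    as_is: RecoveryProfile      # Step Two: what recovery looks like today
    target: RecoveryProfile     # Step Three: what it should look like


def find_gaps(processes: list[BusinessProcess]) -> list[str]:
    """Describe every place where today's recovery falls short of the target."""
    gaps = []
    for p in processes:
        if p.as_is.hours_to_recover > p.target.hours_to_recover:
            gaps.append(f"{p.name}: recovery takes {p.as_is.hours_to_recover:g}h, "
                        f"target {p.target.hours_to_recover:g}h")
        if p.as_is.hours_of_data_lost > p.target.hours_of_data_lost:
            gaps.append(f"{p.name}: loses {p.as_is.hours_of_data_lost:g}h of data, "
                        f"target {p.target.hours_of_data_lost:g}h")
    return gaps


processes = [
    BusinessProcess("time capture and billing",
                    as_is=RecoveryProfile(72, 24), target=RecoveryProfile(8, 4)),
    BusinessProcess("payroll",
                    as_is=RecoveryProfile(24, 24), target=RecoveryProfile(48, 24)),
]
for gap in find_gaps(processes):
    print(gap)
```

Here only the billing process shows gaps; payroll already meets its (hypothetical) targets, so effort would go to billing first.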

Data Protection Audit Step Four: Test & Implement

Once you’re comfortable with the outcomes of steps 1 through 3 and have chosen your data protection provider or technology, you absolutely must test it. And test it on a regular basis. As companies add new roles, new products and new services, the systems that touch them must be adapted, and sometimes those adaptations can alter your data protection program. Regular testing is critical to the success of any data protection plan.
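Regular testing is easier to sustain when overdue tests are visible. A minimal Python sketch that flags systems whose last successful restore test is older than a chosen interval; the 90-day (roughly quarterly) default is our assumption, and the system names and dates are hypothetical.

```python
from datetime import date, timedelta


def overdue_for_testing(last_tested: dict[str, date], today: date,
                        max_age: timedelta = timedelta(days=90)) -> list[str]:
    """Return systems whose most recent restore test is older than `max_age`."""
    return sorted(name for name, tested in last_tested.items()
                  if today - tested > max_age)


# Hypothetical test log: system name -> date of last successful restore test.
log = {
    "billing server": date(2012, 10, 1),
    "inventory database": date(2012, 6, 15),
    "card-processing PC": date(2012, 3, 2),
}
print(overdue_for_testing(log, today=date(2012, 11, 7)))
```

Passing `today` explicitly keeps the check repeatable; a system that is missing from the log entirely has never been tested and deserves attention before any of these.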

We see many companies and even service providers that do not do these tests. They are not easy! But they are essential – exactly because they are not easy.

In the concluding part of this series, we’ll examine the organization from a different angle: from the perspective of its devices and systems.
